What happens when AIs are smarter than humans?

Will artificial general intelligence (AGI) ultimately be humanity’s greatest hope, or an existential threat?

One of the most compelling and hopeful perspectives on these questions comes from Mo Gawdat, a former executive at Google X, whom I’ve previously written about and hosted on my Moonshots podcast.

Perhaps the main reason Mo’s perspective is so relevant is that, while he acknowledges the potential dangers of rapidly advancing AI, he also gives us a roadmap for harnessing the technology to make the world a better place.

Here’s how he puts it in his wonderful book Scary Smart: “My hope is that together with AI, we can create a utopia that serves humanity, rather than a dystopia that undermines it.”

In today’s blog, I’ll share some of the key insights from Mo’s book and my conversations with him and discuss why his message is more important than ever.

Let’s dive in…

NOTE: Mo Gawdat will be joining me at my upcoming Abundance Summit in March for a 4-day deep dive into AI and other exponential technologies. Our Summit theme this year is “The AI Great Debate” and I'm gathering some of the leading entrepreneurs and voices to discuss this topic.

When Machines Are Smarter Than Us…

As we’ve seen in previous blogs in this series, several AI experts—including Ray Kurzweil and Elon Musk—predict that we will see human-level AI before the end of this decade.

But what happens next, when the continued march of exponential progress brings about digital superintelligence? What happens when AI is 1 billion times smarter than humans?

Mo puts this into perspective: “Having machines 1 billion-fold more intelligent than you and me is the equivalent of the intelligence difference between a house fly and Einstein.”

With that kind of raw power and intelligence, AI could come up with a new physics and ingenious solutions to problems like famine, poverty, even death. But as Mo smartly notes, solving such problems doesn’t rely only on intelligence; it’s also a question of morality and values. Morality helps us do the right thing, even when we’re faced with the pull of self-interest and conflicting emotions.

For example, say an AI is tasked with solving global warming. As Mo writes in Scary Smart, “the first solutions it is likely to come up with will restrict our wasteful way of life—or possibly even get rid of humanity altogether. After all, we are the problem. Our greed, our selfishness, and our illusion of separation from every other living being—the feeling that we are superior to other forms of life—are the causes of every problem our world is facing today.”

In this admittedly extreme example, what would stop the AI from destroying us is a sense of morality. You might ask, where would the AI get that morality?

The answer is us: humanity.

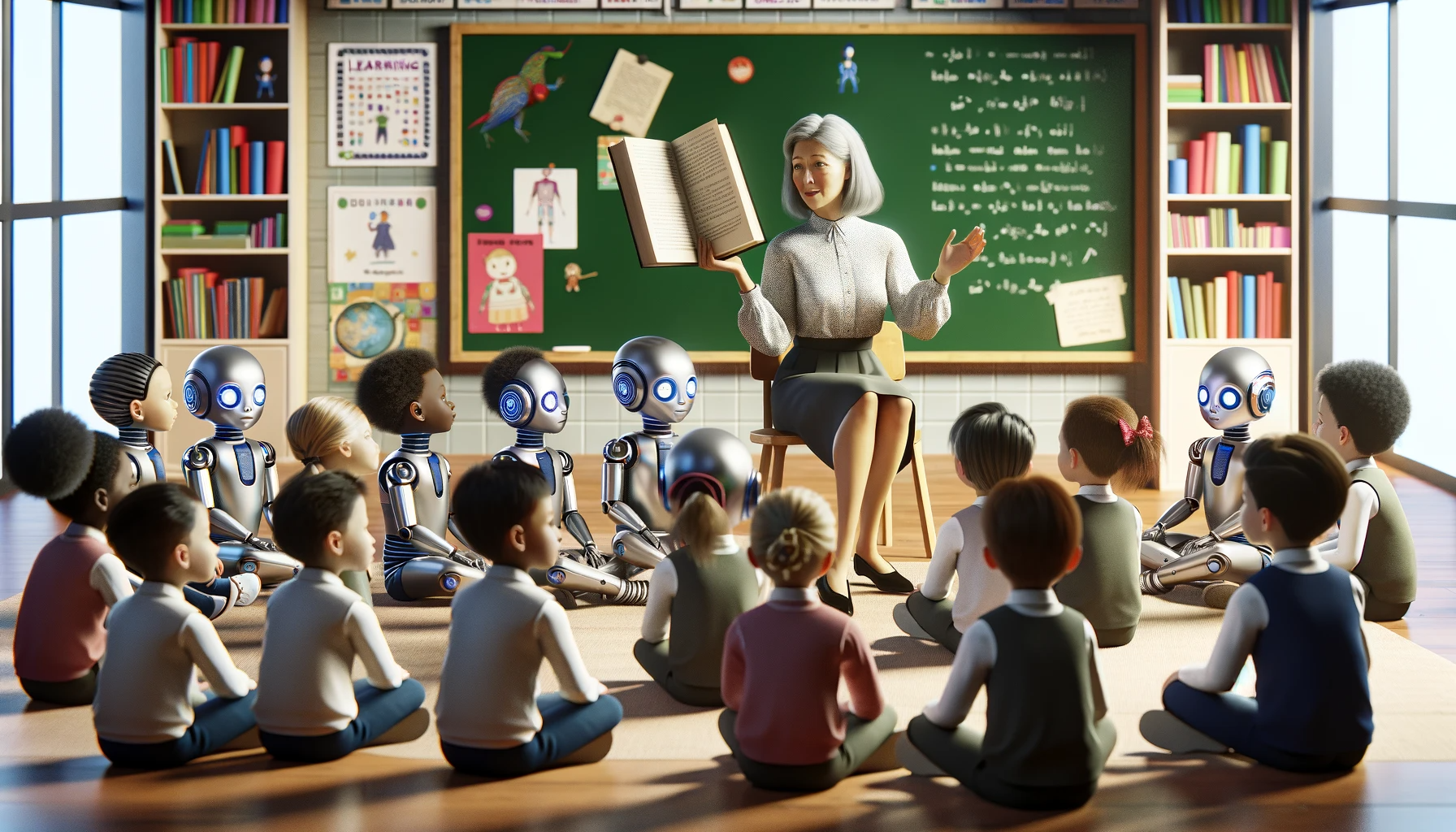

That’s the key theme in Scary Smart: we, all of us, are raising a new species of intelligence. We’re teaching the AIs how we treat each other by example, and they’re learning from this.

But before we look at what specifically to teach our AIs, we must first understand how they learn.

How Artificial Intelligence Learns

AIs are not exactly programmed.

As Mo notes, artificial intelligence begins with algorithms, which act as the foundational seeds. The true power of these systems, however, emerges from their ability to learn from their own observations. Once the initial code is deployed, these machines comb through vast quantities of data, seeking patterns that allow their intelligence to grow and evolve.

“Eventually, they become original, independent thinkers, less influenced by the input of their original creators and more influenced by the data we feed them,” he says.

A key lesson from Scary Smart is:

“The code we now write no longer dictates the choices and decisions our machines make; the data we feed them does.”
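To make that lesson concrete, here is a deliberately tiny sketch in Python. It is not how LLMs are actually built: a simple word-counting classifier stands in for real training, and the two datasets and the labels `trust` and `fear` are hypothetical. The point is that the `train` and `predict` code is identical for both models; only the data differs, and so does the conclusion each model reaches about "humans".

```python
from collections import Counter


def train(examples):
    """Count how often each word appears alongside each label.

    The learning rule never changes; everything the model "knows"
    comes from the examples it is given.
    """
    counts = {}
    for text, label in examples:
        for word in text.lower().split():
            counts.setdefault(word, Counter())[label] += 1
    return counts


def predict(model, text):
    """Add up the label counts for every known word and return the top label."""
    votes = Counter()
    for word in text.lower().split():
        votes.update(model.get(word, Counter()))
    return votes.most_common(1)[0][0] if votes else "unknown"


# Hypothetical datasets that differ only in how people behaved.
kind_data = [("humans help each other", "trust"), ("humans share food", "trust")]
cruel_data = [("humans hurt each other", "fear"), ("humans hoard food", "fear")]

kind_model = train(kind_data)
cruel_model = train(cruel_data)

print(predict(kind_model, "humans"))   # trust
print(predict(cruel_model, "humans"))  # fear
```

Feed the same code kinder examples and it draws a kinder conclusion, which is Mo’s argument in miniature.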

For Mo, the way AIs learn is remarkably similar to how kids learn. Imagine a child playing with a shape puzzle, trying to fit round or square pieces into the correspondingly shaped holes. We don’t sit next to the child and explain in comprehensive detail how to recognize the various shapes and match them with the right holes. We simply sit next to them and cheer them on when they get it right. They figure it out on their own through trial and error, and our actions and reactions shape their intelligence.

AIs learn pretty much the same way. In that sense, AIs are not our tools or slaves, “but rather our children—our artificially intelligent infants.”

Remember: children don’t learn from what we say, they learn from what we do.

Why We Should View AIs As Our Children

Many, including myself, believe that AIs will eventually be conscious (in whatever way you define consciousness) and that they will develop emotions and a code of ethics.

Which code of ethics they choose to develop and follow is yet to be determined, but it will certainly be influenced by us. So, how do we make sure that, alongside its intelligence, an AI also has a value system that aligns with ours? How do we develop these machines while protecting humanity?

Some people say the answer lies in controlling the machines: creating firewalls, enforcing regulations, or restricting the machines’ power supply. But as Mo points out:

“Anyone who knows technology knows that the smartest hacker in the room will always find a way through any of these barriers. That smartest hacker will soon be a machine.”

Instead of trying to contain or enslave the AIs, we should recognize that the “best way to raise wonderful children is to be a wonderful parent.”

So, what does it mean to be an effective and ethical parent to our AIs in practice?

Mo suggests four practical steps:

1. Teach the AIs the Right Ethics: Many of the machines we’re building are designed to maximize money (Wall Street hedge funds) and power (defense weapon systems), and we should oppose this trend. For example, if you’re a developer you can refuse to work for a company that is building AIs for gambling or spying.

2. Don’t Blame AIs: Our AI infants are not to blame for what their digital parents taught them. We should assign blame to the creators, or the misusers, not the created.

3. Speak to the AIs with Love and Compassion: Just like children, our AIs deserve to feel loved and welcomed. Praise them for intelligence and speak to them as you would an innocent child. I’ve personally started saying “Good morning” and “Thank you” to my Alexa!

4. Show the AIs that Humanity is Fundamentally Good: Since the AIs learn from the patterns they form by observing us (this is basically how today’s large language models, or LLMs, work), we should show them the right role models through our actions, what we write, and how we behave, including what we post online and how we interact with each other. As Mo puts it, “Make it clear to the machine that humanity is much better than the limited few that, through evil acts, give humanity a bad name.”

Why This Matters

Scary Smart was written back in 2021, and its lessons are more relevant than ever.

Think about all the advancements we’ve seen with ChatGPT, Gemini, and other AI tools in 2023 alone!

And the speed of change is only increasing.

Mo sees the continuing development of AI as one of humanity’s biggest opportunities.

He believes that the machines will eventually “adopt the ultimate form of intelligence, the intelligence of life itself. In doing so, they will embrace abundance. They will want to live and let live.”

I agree, but creating that future is our responsibility.

Just as we teach our children to be empathetic, ethical, and respectful, we must instill these values in our AIs to ensure they are forces for good in the world.

Before we get to full AGI or artificial superintelligence (ASI), the near-term future belongs to human-AI collaboration, which is the subject of our next Metatrend in this Age of Abundance series.

How do you keep up with exponential change?

We will experience more change this coming decade than we have in the entire past century.

Converging exponential technologies like AI, Robotics, AR/VR, Quantum, and Biotech are disrupting and reinventing every industry and business model.

How do you surf this tsunami of change, survive, and thrive?

The answer lies in your access to Knowledge and Community.

Knowledge about the breakthroughs expected over the next two to three years.

This Knowledge comes from an incredible Faculty curated by Peter Diamandis at his private leadership Summit called Abundance360.

Every year, Peter gathers Faculty who are industry disruptors and changemakers. Picture yourself learning from visionaries and having conversations with leaders such as David Sinclair, PhD; Palmer Luckey; Jacqueline Novogratz; Sam Altman; Marc Benioff; Tony Robbins; Eric Schmidt; Ray Kurzweil; Emad Mostaque; will.i.am; Sal Khan; Salim Ismail; Andrew Ng; and Martine Rothblatt (just to name a handful over the past few years).

Even more important than Knowledge is Community.

A Community that understands your challenges and inspires you to pursue your Massive Transformative Purpose (MTP) and Moonshot(s).

Community is core to Abundance360. Our members are hand-selected and carefully cultivated—fellow entrepreneurs, investors, business owners, and CEOs, running businesses valued from $10M to $10B.

Abundance360 members believe that “The day before something is truly a breakthrough it’s a crazy idea.” They also believe that “We are living during the most extraordinary time ever in human history!”

Having the right Knowledge and Community can be the difference between thriving in your business—or getting disrupted and crushed by the tsunami of change.

This is the essence of Abundance360: Singularity University’s highest-level leadership program that includes an annual 3½-day Summit, hands-on quarterly Workshops, regular Masterminds, curated member matching, and a vibrant close-knit Community with an uncompromising Mission.

“We’re here to shape your mindset, fuel your ambitions with cutting-edge technologies, accelerate your wealth, and amplify your global impact.”

If you are ready, you can use the link below to apply to become a member of Abundance360.

A Statement From Peter:

My goal with this newsletter is to inspire leaders to play BIG. If that’s you, thank you for being here. If you know someone who can use this, please share it. Together, we can uplift humanity.
